
Dec 19, 2025

The Urgent Need for Enterprise AI Guardrails

Discover why guardrails are essential for managing AI in organizations. Learn how private AI systems enforce policy through architecture, not user compliance.


AUTHOR

Subduxion

AI has quietly permeated the workplace, not primarily through formal corporate deployment, but via individual employees leveraging public AI tools for daily tasks. From drafting customer correspondence and summarizing internal documents to processing contract language and financial data, sensitive company information is routinely fed into systems residing outside your organization's security perimeter, access controls, and audit infrastructure.

This isn't experimentation. It's uncontrolled system usage, and it generates institutional risk that acceptable use policies alone cannot contain.

The Problem Isn't Behavioral, It's Structural

Public AI tools, while remarkable for their accessibility, are designed for broad consumer use. They are optimized for ease of use, not for enterprise data governance, and they lack features that organizational security and compliance depend on:

Context-specific data handling rules: No enforcement of what data can be processed

Integration with identity and access management (IAM) systems: No role-based access

Enterprise-grade security controls: Operating outside your trusted environment

Auditable trails: Cannot meet regulatory or internal documentation requirements

While these tools are invaluable for research, ideation, and exploration, they are fundamentally unsuited for workflows involving sensitive data, regulated decision-making, or operations demanding institutional accountability. The UK government's "Mitigating ‘Hidden’ AI Risks Toolkit" highlights that many AI risks stem from mundane, organizational factors and human interaction with tools, underscoring the need for a foundational framework beyond just technical safeguards.

This crucial distinction drives the operational necessity of moving from public AI tools to private AI systems.

Private AI: Defining System-Level Control, Beyond Model Ownership

A private AI system operates within your organization's security boundary. It enforces policy through architecture rather than relying solely on user compliance, and it integrates with your existing governance infrastructure: identity management, role-based access controls, data classification systems, and audit logging.

Most critically, it implements guardrails.

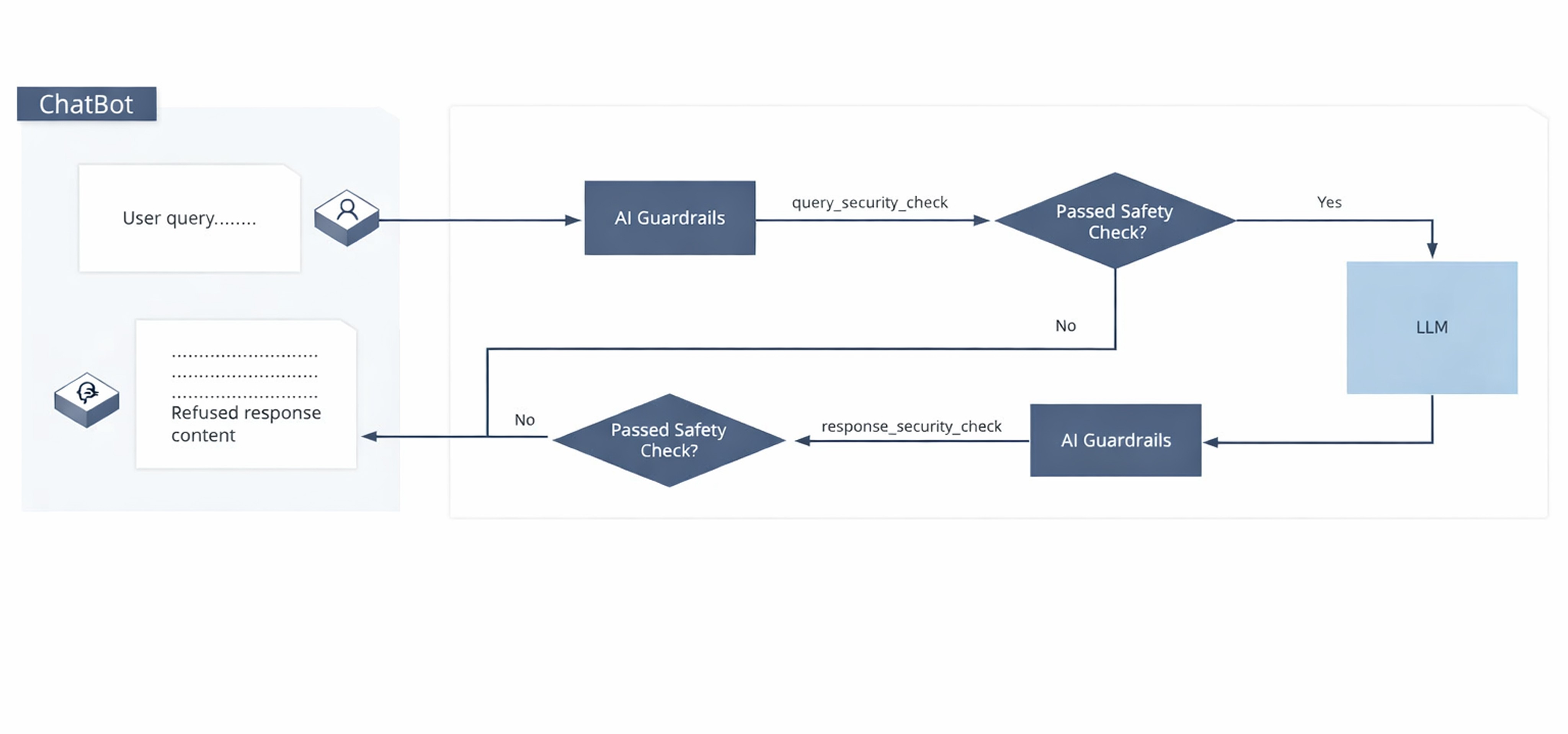

Guardrails: Hard Enforcement, Not Soft Guidelines

Guardrails are built-in, system-level checks that operate independently of user input or intention. They function as critical inspection layers throughout the AI interaction:

Pre-processing Checks:

What data is being submitted?

Is it classified as sensitive or restricted?

Is this specific use case permitted for this user role in this context?

Does the request comply with applicable data handling requirements (e.g., data minimization)?
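The pre-processing questions above can be sketched as a single function that runs before any data reaches a model. This is a minimal illustration, not a reference implementation: the role names, use cases, classification levels, and the `POLICY` and `ALLOWED_FIELDS` tables are all hypothetical, and a real system would presumably read them from its IAM and data classification services.

```python
from dataclasses import dataclass
from enum import Enum


class Classification(Enum):
    PUBLIC = 1
    INTERNAL = 2
    RESTRICTED = 3


# Hypothetical policy table: the highest classification each role may submit
# for a given use case. A missing entry means the use case is not permitted.
POLICY = {
    ("hr_analyst", "summarize_policy"): Classification.INTERNAL,
    ("legal_counsel", "extract_obligations"): Classification.RESTRICTED,
}

# Hypothetical data-minimization rule: the fields each use case actually needs.
ALLOWED_FIELDS = {
    "summarize_policy": frozenset({"document_text"}),
    "extract_obligations": frozenset({"contract_text", "counterparty"}),
}


@dataclass(frozen=True)
class Request:
    role: str
    use_case: str
    classification: Classification
    fields: frozenset  # names of the data fields included in the request


def pre_check(request: Request) -> list[str]:
    """Return the failed checks; an empty list means the request may proceed."""
    failures = []

    # Is this use case permitted for this role, and at this sensitivity level?
    ceiling = POLICY.get((request.role, request.use_case))
    if ceiling is None:
        failures.append("use case not permitted for role")
    elif request.classification.value > ceiling.value:
        failures.append("classification exceeds role ceiling")

    # Data minimization: reject fields the use case does not need.
    extra = request.fields - ALLOWED_FIELDS.get(request.use_case, frozenset())
    if extra:
        failures.append("unnecessary fields: " + ", ".join(sorted(extra)))

    return failures
```

In this sketch, the user never makes the decision: a request either returns an empty failure list and proceeds, or it carries a list of reasons that the surrounding system can act on and log.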

Post-processing Checks:

Does the AI output contain information that should not be disclosed in this format?

Does it imply a decision, assessment, or recommendation that requires documented human review?

Does it meet defined quality and compliance thresholds?

If any check fails, the system blocks the interaction or routes it for mandatory human review. The decision is programmatic, not discretionary. This goes beyond endpoint security or content filtering: it is workflow governance applied to AI interactions. As GitHub emphasizes, clear, practical policies and a framework for tiered tool usage build the trust necessary for safe AI adoption.
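The post-processing checks and the block-or-review routing can be sketched in the same spirit. The patterns below are hypothetical stand-ins chosen only to make the example runnable. Two regular expressions are not a disclosure control, and a production guardrail would more plausibly call the organization's own classification and review services.

```python
import re
from enum import Enum


class Outcome(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical disclosure patterns (assumptions for illustration only).
DISCLOSURE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),          # US-style SSN shape
    re.compile(r"\bnon-public\b", re.IGNORECASE),  # explicit sensitivity marking
]

# Hypothetical phrases suggesting the output implies a decision or recommendation.
DECISION_PATTERNS = [
    re.compile(r"\b(we recommend|should be rejected|is approved)\b", re.IGNORECASE),
]


def post_check(output: str) -> Outcome:
    """Decide what happens to a model output before it is released to the user."""
    # Disclosure is checked first, so a blocked output is never sent to review.
    if any(p.search(output) for p in DISCLOSURE_PATTERNS):
        return Outcome.BLOCK
    if any(p.search(output) for p in DECISION_PATTERNS):
        return Outcome.HUMAN_REVIEW
    return Outcome.ALLOW
```

The ordering is a design choice in this sketch: blocking takes precedence over review, so a reviewer is never shown content that should not have been disclosed in the first place.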

Why This Matters: Real Organizational Scenarios

These everyday examples highlight the urgency:

Human Resources: A team uploads candidate profiles to expedite screening, inadvertently exposing protected personal characteristics to a public AI service lacking specific controls for PII. The "hidden risk" here is unintended data exposure due to a lack of structural enforcement (gov.uk).

Legal Department: AI is used to extract obligations from a draft contract, and no one recognizes that the contract's confidentiality clause prohibits external processing.

Finance Department: An AI is asked to summarize quarterly results for internal communication, and its output inadvertently discloses non-public financial information.

Compliance Officer: AI drafts a regulatory response, and nothing flags that the response may be interpreted as a formal position requiring documented human oversight and approval.

In each instance, the risk isn't malicious intent; it's a structural gap: the absence of system-level enforcement aligned with the organization's existing risk and compliance frameworks.

A Governance Requirement, Not a Technology Choice

The architecture of guardrail-based private AI aligns with established AI governance principles recognized globally, including those outlined by Microsoft for responsible AI practices:

Data Minimization: Only data necessary for the specific task is processed.

Purpose Limitation: AI usage is strictly constrained to defined, authorized use cases.

Human Oversight: Critical decisions remain subject to documented human review and intervention.

Accountability: Every interaction is logged, traceable, and fully auditable.
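One way to make the accountability principle concrete is a tamper-evident log, in which each record's hash covers the previous record's hash. The article does not prescribe this technique; hash chaining is an assumption chosen here for illustration, and the field names (`user`, `use_case`, `outcome`, `timestamp`) are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """Hash a record body deterministically (keys sorted before serializing)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, use_case: str, outcome: str, timestamp: str) -> dict:
    """Append a record whose hash covers its content and the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {
        "user": user,
        "use_case": use_case,
        "outcome": outcome,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Return True only if no record has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Note that the sketch records who did what and with which outcome, but not the submitted content itself, which keeps the log consistent with data minimization.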

Organizations aren't mandated to adopt specific models or vendors. Rather, they are required to ensure that AI usage within their operations is governed with the same rigor applied to other sensitive operational systems. The Microsoft Frontier Governance Framework, for example, emphasizes security measures, safety mitigations, and monitoring as essential precursors to safe and trustworthy AI use, especially for advanced models.

Without these AI guardrails, AI remains an uncontrolled capability, a digital "shadow IT" that introduces unpredictable liabilities. With guardrails, it becomes a governed system.

This isn't a mere technical distinction; it's an institutional imperative.

The real question isn't whether your organization will use AI. It's whether that usage will be governed in a way that aligns with how you already manage access control, document classification, decision logging, and third-party data flows. Private AI systems make this essential alignment architecturally enforceable, establishing boundaries through system design, regardless of individual user awareness or intent. This is the bedrock of operational AI governance.